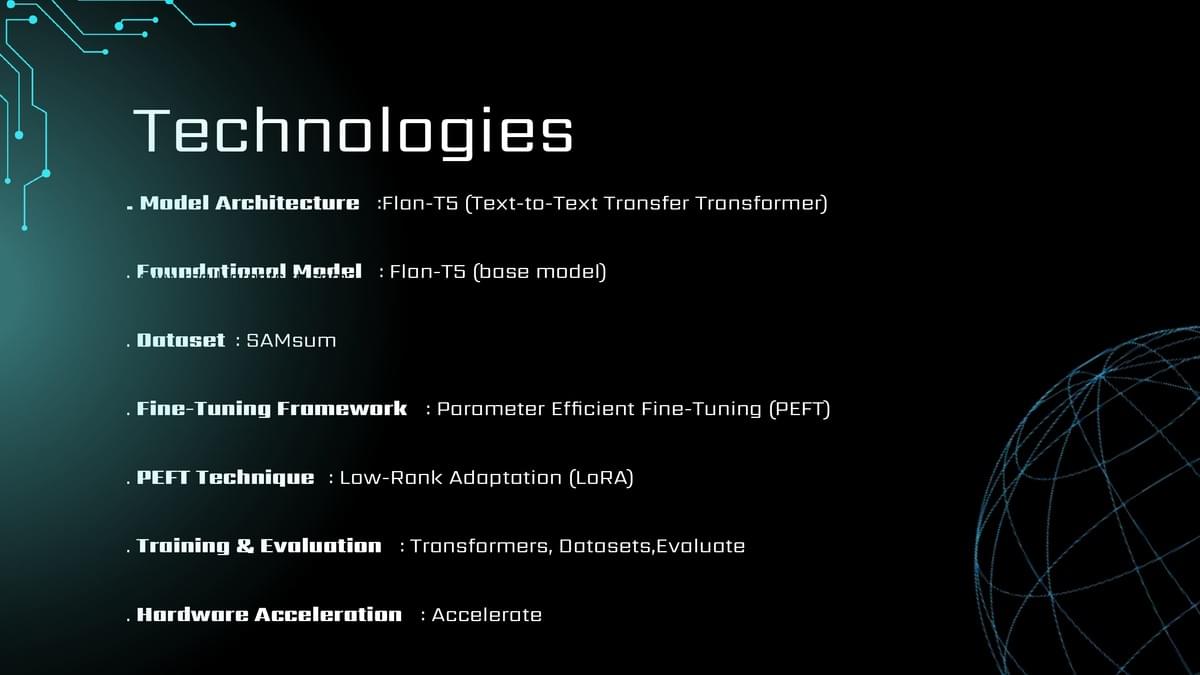

This project demonstrates how to fine-tune the Flan-T5 base model using LoRA (Low-Rank Adaptation) to generate summaries from dialogue text.

The project guides you through:

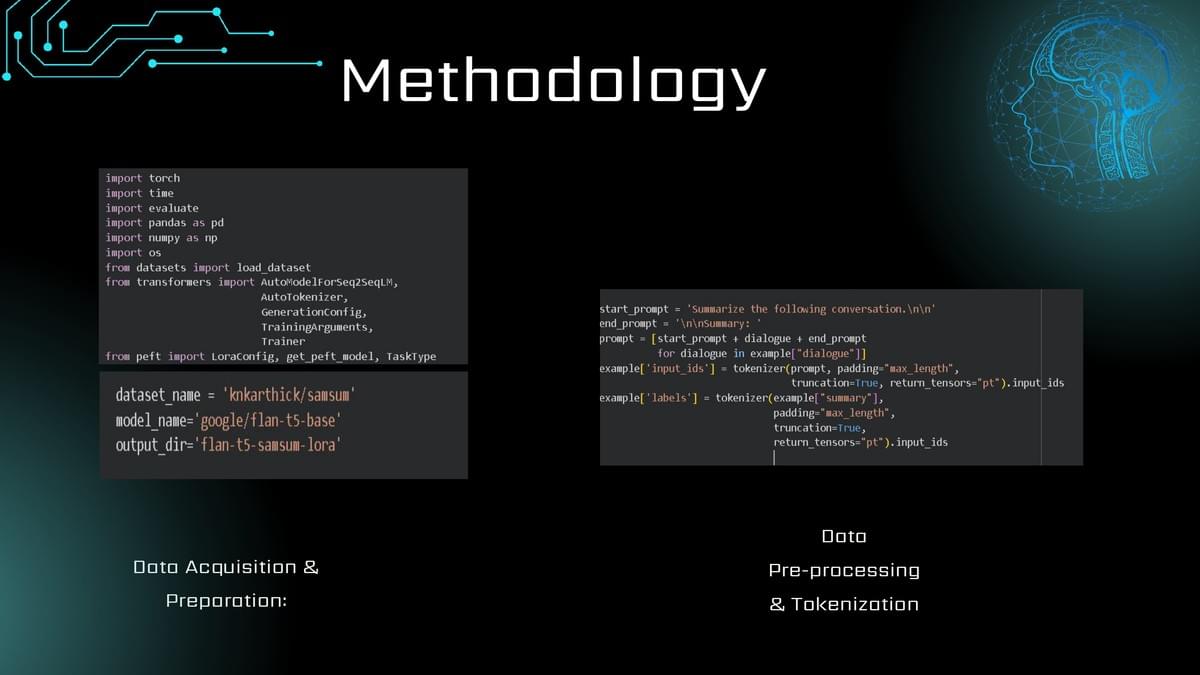

Loading and exploring SAMsum dataset

Tokenizing inputs and preparing labels

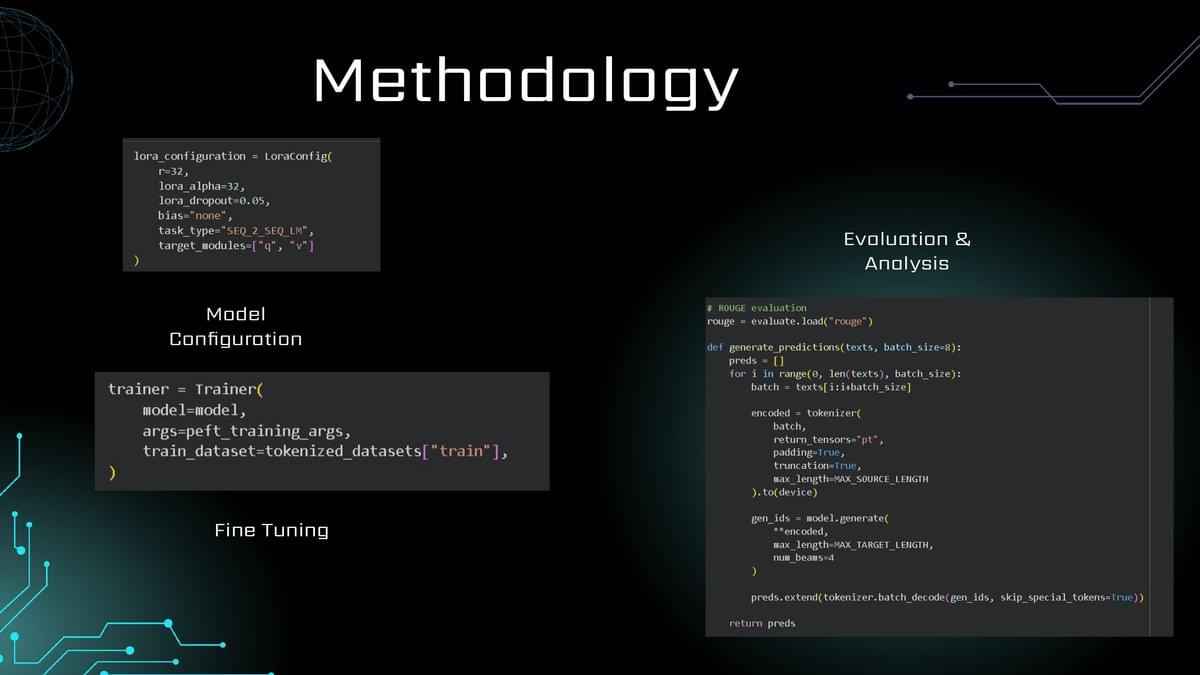

Applying PEFT + LoRA to reduce memory and computation cost

Training the model using HuggingFace Trainer

Evaluating the generated summaries using ROUGE
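For the dataset-exploration step, a first look usually means checking the fields and length distributions before choosing truncation limits. SAMsum rows carry `id`, `dialogue`, and `summary` fields. In the project the data would come from the Hugging Face Hub via `datasets.load_dataset`, but the exact dataset id is not stated here. The minimal sketch below therefore runs on two hypothetical, made-up records shaped like SAMsum rows and uses only the standard library.

```python
from statistics import mean

# Two hypothetical records shaped like SAMsum rows (fields: id, dialogue, summary).
# They are made up for illustration; they are NOT taken from the dataset.
records = [
    {
        "id": "toy-1",
        "dialogue": "Anna: Are we still on for lunch?\nBen: Yes, 12:30 at the usual place.\nAnna: Great, see you!",
        "summary": "Anna and Ben will meet for lunch at 12:30.",
    },
    {
        "id": "toy-2",
        "dialogue": "Chris: I lost my keys.\nDana: Check the car.",
        "summary": "Chris lost his keys.",
    },
]


def describe(rows):
    """Return simple length statistics that help choose truncation limits."""
    # One "Speaker: message" turn per line; splitlines() handles both \n and \r\n.
    turns = [len(r["dialogue"].splitlines()) for r in rows]
    # Whitespace word counts are only a rough proxy for tokenizer lengths.
    dialogue_words = [len(r["dialogue"].split()) for r in rows]
    summary_words = [len(r["summary"].split()) for r in rows]
    return {
        "n": len(rows),
        "max_turns": max(turns),
        "mean_dialogue_words": mean(dialogue_words),
        "max_summary_words": max(summary_words),
    }


stats = describe(records)
print(stats)
```

Iterating a loaded `datasets` split yields dictionaries, so the same function should also work on the real training split. Word counts underestimate subword token counts, though, so tokenizer lengths are the better basis for picking `max_length`.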
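Preparing labels for a seq2seq model usually means tokenizing the reference summary as the target and replacing padding positions with -100, the default `ignore_index` of PyTorch's `CrossEntropyLoss`, so padded positions do not contribute to the loss. The sketch below shows that masking step on plain id lists, assuming the T5 convention of pad id 0 and end-of-sequence id 1. The prompt wording in `build_prompt` and the example ids are hypothetical. With a real tokenizer the label ids would come from a call such as `tokenizer(text_target=summaries, max_length=128, padding="max_length", truncation=True)`, where the length of 128 is likewise only an assumed value.

```python
PAD_ID = 0            # T5 / Flan-T5 tokenizers use id 0 for <pad>
EOS_ID = 1            # and id 1 for </s>
IGNORE_INDEX = -100   # default ignore_index of torch.nn.CrossEntropyLoss


def build_prompt(dialogue: str) -> str:
    """Wrap a dialogue in an instruction (hypothetical wording, not the project's)."""
    return f"Summarize the following conversation.\n\n{dialogue}\n\nSummary:"


def mask_label_padding(label_ids, pad_id=PAD_ID):
    """Replace pad ids with IGNORE_INDEX so the loss skips those positions."""
    return [IGNORE_INDEX if tok == pad_id else tok for tok in label_ids]


# Made-up label ids: three summary tokens, </s>, then two pad positions.
labels = [571, 19, 3, EOS_ID, PAD_ID, PAD_ID]
print(build_prompt("Anna: Lunch at noon?\nBen: Sure."))
print(mask_label_padding(labels))  # [571, 19, 3, 1, -100, -100]
```

The end-of-sequence token is kept in the labels on purpose, so the model learns where a summary should stop.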
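The memory saving from LoRA follows from parameter counts. A frozen weight W of shape d_out x d_in receives a trainable update given by the product BA, with B of shape d_out x r and A of shape r x d_in, so each adapted matrix adds r(d_out + d_in) trainable values instead of d_out * d_in. The arithmetic below assumes flan-t5-base dimensions (hidden size 768, 12 encoder and 12 decoder layers) and a hypothetical PEFT setup along the lines of `LoraConfig(r=16, target_modules=["q", "v"], task_type=TaskType.SEQ_2_SEQ_LM)`. The project's actual rank and target modules are not stated here.

```python
def lora_param_count(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters LoRA adds to one frozen d_out x d_in weight.

    The update is B @ A with B: d_out x r and A: r x d_in,
    so only r * (d_out + d_in) values are trained instead of d_out * d_in.
    """
    return r * (d_out + d_in)


# Assumed flan-t5-base dimensions.
D_MODEL = 768          # hidden size; the q and v projections are 768 x 768
ENCODER_LAYERS = 12    # one self-attention block per layer
DECODER_LAYERS = 12    # self-attention + cross-attention per layer
R = 16                 # hypothetical LoRA rank

attention_blocks = ENCODER_LAYERS * 1 + DECODER_LAYERS * 2   # 36
target_modules = attention_blocks * 2                        # q and v -> 72

trainable = target_modules * lora_param_count(D_MODEL, D_MODEL, R)
full = target_modules * D_MODEL * D_MODEL

print(f"LoRA trainable params: {trainable:,}")    # 1,769,472
print(f"Frozen q/v params:     {full:,}")         # 42,467,328
print(f"Ratio: {trainable / full:.4f}")           # 0.0417
```

For this assumed configuration, the count should agree with the trainable-parameter figure that `model.print_trainable_parameters()` reports after wrapping the model with PEFT. The ratio works out to 2r / 768, so doubling `r` doubles the adapter size while it stays a small fraction of the frozen weights.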
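During training, the `Trainer` builds batches through a data collator. A common choice for seq2seq models is `DataCollatorForSeq2Seq` from `transformers`, which pads each batch to its longest example and pads `labels` with -100 by default (its `label_pad_token_id`). Whether the project uses this collator is an assumption. The pure-Python sketch below mirrors only that padding logic on plain lists, assuming right padding and pad id 0 as in T5 tokenizers. The real collator returns tensors and, when given the model, also prepares `decoder_input_ids`.

```python
def pad_batch(batch, pad_id=0, label_pad_id=-100):
    """Right-pad a list of {"input_ids", "labels"} examples to the batch maximum."""
    max_input = max(len(ex["input_ids"]) for ex in batch)
    max_label = max(len(ex["labels"]) for ex in batch)
    out = {"input_ids": [], "attention_mask": [], "labels": []}
    for ex in batch:
        ids, labels = ex["input_ids"], ex["labels"]
        n_pad = max_input - len(ids)
        out["input_ids"].append(ids + [pad_id] * n_pad)
        # 1 marks real tokens the encoder should attend to, 0 marks padding.
        out["attention_mask"].append([1] * len(ids) + [0] * n_pad)
        # Labels are padded with -100 (ignored by the loss), not with the pad id.
        out["labels"].append(labels + [label_pad_id] * (max_label - len(labels)))
    return out


# Two hypothetical pre-tokenized examples of different lengths.
batch = [
    {"input_ids": [5, 6, 7, 1], "labels": [8, 1]},
    {"input_ids": [9, 1], "labels": [10, 11, 1]},
]
print(pad_batch(batch))
```

Padding per batch rather than to one fixed length avoids spending compute on padding tokens when most dialogues in a batch are short.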
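ROUGE compares a generated summary with its reference. ROUGE-1 and ROUGE-2 measure unigram and bigram overlap, and ROUGE-L measures the longest common subsequence; the sketch below reports each as an F1 score. In practice these scores are usually computed with a library such as Hugging Face `evaluate` (`evaluate.load("rouge")`) or Google's `rouge_score`, which apply their own tokenization and optional stemming and also report `rougeLsum`. This simplified sketch only lowercases and splits on whitespace, so its numbers will not exactly match those libraries.

```python
from collections import Counter


def _tokens(text: str):
    # Simplified tokenization: lowercase + whitespace split (no stemming).
    return text.lower().split()


def _f1(overlap: int, pred_len: int, ref_len: int) -> float:
    if overlap == 0:
        return 0.0
    precision, recall = overlap / pred_len, overlap / ref_len
    return 2 * precision * recall / (precision + recall)


def rouge_n(pred: str, ref: str, n: int = 1) -> float:
    """ROUGE-N F1 from clipped n-gram overlap counts."""
    p, r = _tokens(pred), _tokens(ref)
    p_grams = Counter(tuple(p[i:i + n]) for i in range(len(p) - n + 1))
    r_grams = Counter(tuple(r[i:i + n]) for i in range(len(r) - n + 1))
    overlap = sum((p_grams & r_grams).values())
    return _f1(overlap, sum(p_grams.values()), sum(r_grams.values()))


def lcs_length(a, b) -> int:
    """Longest common subsequence length using a single-row DP table."""
    row = [0] * (len(b) + 1)
    for x in a:
        diag = 0  # previous row's value at column j - 1 (the diagonal cell)
        for j, y in enumerate(b, start=1):
            above = row[j]
            row[j] = diag + 1 if x == y else max(row[j], row[j - 1])
            diag = above
    return row[-1]


def rouge_l(pred: str, ref: str) -> float:
    """ROUGE-L F1 based on the longest common subsequence."""
    p, r = _tokens(pred), _tokens(ref)
    return _f1(lcs_length(p, r), len(p), len(r))


reference = "Anna and Ben will meet for lunch"
prediction = "lunch for Anna and Ben"
print(round(rouge_n(prediction, reference, 1), 4))  # 0.8333
print(round(rouge_n(prediction, reference, 2), 4))  # 0.4
print(round(rouge_l(prediction, reference), 4))     # 0.5
```

The example shows why ROUGE-L is reported next to ROUGE-1: every word of the prediction appears in the reference, so unigram precision is 1.0, but the reordering breaks the common subsequence down to "anna and ben" and ROUGE-L falls to 0.5.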